Learning goals

Are you ineresting in learining the latest topics in Large Language Models (LLM), Retrieval-Augmented Generation (RAG), and Multi-Modality. We are organize this summer study group to keep you at the forefront of these cutting-edge technologies and enhance your knowledge and skills in these rapidly evolving fields.

By attending this study group, you will:

Gain the understanding of Large Language Models and their applications.

Learn about Retrieval-Augmented Generation and how it enhances information retrieval and generation processes.

Understand Vector databases and the concept of similarity search and how vector databases enable efficient nearest neighbor search to find similar items based on vector representations.

Explore the world of Multi-Modality and understand how combining different modes of data can lead to more powerful and versatile AI solutions.

Practical information

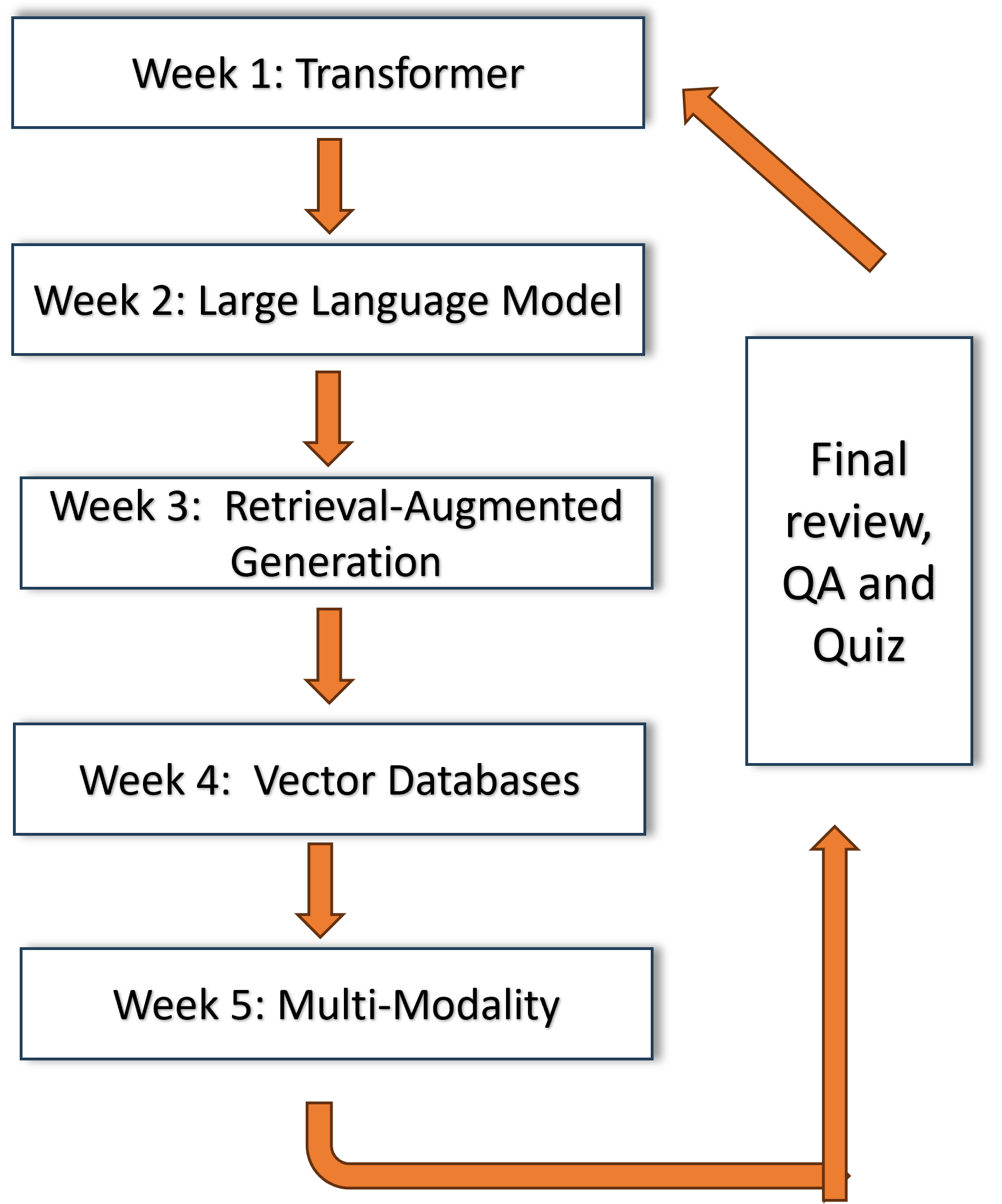

This online study group will be conducted via Zoom, with sessions held every Wednesday from 10:30 AM to 12:00 PM, starting July 31, 2024, and concluding on August 29, 2024. The final review and quiz session will take place on August 29th. In response to requests from students at other universities, we have decided to hold the final review meeting online as well.

Please register using the following link by July 15, 2024.

Cost: The attendance of this study group is free to everyone.

Call for volunteer speakers

In each session, we need a volunteer speaker to give a 30-minute talk summarizing the three papers listed in the references. Volunteer speakers can be Master's students, doctoral students, postdoctoral researchers, or senior researchers. If you are interested in volunteering as a speaker, please indicate your intention in the registration form . This is a great opportunity to enhance your presentation skills, share your insights, and contribute to the group's learning.Schedule

| Topics | Time | Speakers | Learning outcomes | Reference 1 | Reference 2 | Reference 3 |

|---|---|---|---|---|---|---|

| Transformer | 10.30-12.00, 31.07.2024, Wednesday | Ziqi kang , Download Slides, View recorded presentation |

|

Attention is All You Need This paper was the foundation for LLMs with the introduction of the Transformer architecture. | The Annotated Transformer This paper present an annotated version of the above paper in the form of a line-by-line implementation. This document itself is a working notebook, and should be a completely usable implementation. | A Comprehensive Analysis of T5, BERT, and GPT This article introduces early NLP techniques: word embeddings and compares T5, BERT and GPT models. |

| Large Language Models | 10.30-12.00, 07.08.2024, Wednesday | Lauri Seppalainen , Guanghan Wu . Slides: Part 1 , Part 2 . View recorded presentation |

| Prompt Design and Engineering: Introduction and Advanced Methods This paper introduces core concepts of prompts and prompt engineering, advanced techniques like Chain-of-Thought and Reflection, and the principles behind building LLM-based agent. | Scaling Laws for Neural Language Models This paper studied empirical scaling laws for language model performance on the cross-entropy loss. | Parameter-Efficient Fine-Tuning for Large Models: A Comprehensive Survey Parameter Efficient Fine-Tuning (PEFT) provides a practical solution by efficiently adapt the large models over the various downstream tasks by minimizing the number of additional parameters introduced or computational resources required. This paper presents comprehensive studies of various PEFT algorithms, examining their performance and computational overhead. |

| Retrieval-Augmented Generation | 10.30-12.00, 14.08.2024, Wednesday | Harinda Samarasekara . Download slides . View recorded presentation . |

|

Retrieval-Augmented Generation for Large Language Models: A Survey This survey paper offers a detailedexamination of the progression of RAG paradigms for the Naive RAG, the Advanced RAG, and the Modular RAG. | Similarity is Not All You Need: Endowing Retrieval Augmented Generation with Multi Layered Thoughts This paper argues that similarity is not always the panacea for retrieval augmented generation and totally relying on similarity would sometimes degrades the performance of RAG. They propose MetRag, a Multi-layEred Thoughts enhanced Retrieval Augmented Generation framework. | LangChain Library (GitHub) - This library is aimed at assisting in the development of those types of applications, such as simple LLM, Chatbots and other Agents. You can read the documentation here |

| Vector databases | 10.30-12.00, 21.08.2024, Wednesday | Valter Uotila and Quy Anh Nguyen. Download Slides: Part 1 , Part 2 . View recorded presentation . |

|

When Large Language Models Meet Vector Databases: A Survey This survey explores the synergistic potential of Large Language Models and Vector Databases. | Vector database management systems: Fundamental concepts, use-cases, and current challenges This paper provides an introduction to the fundamental concepts, use-cases, and current challenges associated with vector database management systems. | Vector Database Management Techniques and Systems This tutorial paper reviews the existing vector database management techniques for queries, storage and indexing. |

| Multi-Modality | 10.30-12.00, 28.08.2024, Wednesday | Lidia Pivovarova, Qinhan Hou, Huaiwu Zhang . Download Slides: Part 1 , Part 2 , Part 3 . |

|

Foundations and Recent Trends in Multimodal Machine Learning: Principles, Challenges, and Open Questions This paper defines three key principles of modality heterogeneity, connections, and interactions and propose a taxonomy of six core technical challenges: representation, alignment, reasoning, generation, transference, and quantification for multimodal machine learning. | Deep Multimodal Data Fusion This paper proposes a fine-grained taxonomy grouping the fusion models into five classes: Encoder-Decoder methods, Attention Mechanism methods, Graph Neural Network methods, Generative Neural Network methods, and other Constraint-based methods. | LANISTR: Multimodal Learning from Structured and Unstructured Data This paper proposes an attention-based framework to learn both unstructured data (image, text) and structured data (tabular and time series data). It learns a unified representation through joint pretraining on all the available data with significant missing modalities. |

| Final review and Quiz | 9.30-11.00, 29.08.2024, Thursday | Chunbin Lin , Putian Zhou | Two Keynote Presentations on Industry Applications of Large Language Models (LLMs) through Zoom meeting |

Keynote Presentations on Industry Applications of Large Language Models (LLMs) (29.08.2024)

Talk 1:

Title: Visa Genai Platform - Why, What and How

Speaker: Dr. Chunbin Lin

Time: 9:30-10.00 AM , 29.08.2024 (Helsinki time zone)

Abstract: Visa Genai Platform is a secure and scalable service allowing users to query various LLMs and build LLM applications on top of it. It integrates OpenAI models, e.g., GPT-4, GPT-4o, Text-embedding-ada-002, Antropic models, e.g., Claude-3.5-Sonnet, and open-source models, e.g., Mistral 7B. It also offers RAG (Retrieval-Augmented Generation) as a service and Agent as a service. In this talk, I will introduce why we build it, what are the challenges and how we resolve them.

Bio: Chunbin Lin is a senior staff software engineer at Visa, where he leads the Visa GenAI Platform team. Prior to joining Visa, Chunbin held key roles at Amazon AWS, IBM, and Informatica, where he contributed to various advanced projects, including leading the Amazon Redshift workload management team. He earned his Ph.D. in computer science from the University of California, San Diego (UCSD). Chunbin's research interests bridge the fields of distributed systems and machine learning, focusing on (i) applying machine learning techniques to optimize system performance and (ii) developing high-performance machine learning platforms. He has published over 40 papers in top conferences and journals such as SIGMOD, VLDB, and PVLDB, and has served on the program committees for numerous top conferences, including SIGMOD, VLDB, and ICDE.

Talk 2:

Title: LLM applications in a startup business

Speaker: Dr. Putian Zhou

Time: 10:00-10.30 AM , 29.08.2024 (Helsinki time zone)

Abstract: We will introduce two application cases. One is how to improve and extend a traditional service system with the LLMs. Another one is how to make a chatbot matching the requirements of a business company.

Bio: Putian Zhou graduated from the INAR (Institute for Atmospheric and Earth System Research) at the University of Helsinki in 2018, then Putian continued working in research and teaching in INAR. His research topic is to use numerical models to simulate atmospheric chemistry and aerosol processes, applying them to the analysis of climate change in both paleo and future climates. In 2024, during his spare time, Putian co-founded an AI technology company Aitomore with a few friends. They are now collaborating with various businesses, mainly the food service market and online retailers.

Presentation Videos:

.jpg)

This event is connected with University Cooperation Initiative Nordforsk NUEI project .

More information and questions, please contact Prof. Jiaheng Lu , Department of Computer Science, University of Helsinki Email : Jiaheng.lu.at.helsinki.fi